Service Mesh VS API Gateway VS Message Queue - when to use what?

Let's skip the pitch for microservices - you already know what they are and why they make sense. In fact, few topics have received as much coverage in recent years as the unsurprising fact that breaking down a big thing into many small ones can make it easier to handle.

The trouble is: once we've shattered our monolith, how do we put it back together into a larger system that still makes sense? Despite what Istio, Kong or Kafka enthusiasts will tell you, there's more than one answer to this question, and different solutions suit different needs.

This post aims to shed some light on the various ways to organize communication among microservices, and on when a Service Mesh, an API Gateway or a Message Queue might be the best solution for your needs.

But before we talk about the solution, let's talk about the problem:

So - what's the problem?

To function properly, microservice-based architectures have to tackle a number of challenges specific to their distributed nature:

Resiliency

There might be dozens or even hundreds of instances of any given microservice - each of which might fail at any point in time for any number of reasons.

Load Balancing & Auto-Scaling

With potentially hundreds of endpoints capable of fulfilling a request, routing and scaling are anything but trivial. In fact, one of the most effective cost-saving measures for large architectures is to increase the precision of routing and scaling decisions.

Service Discovery

The more complex and distributed an application, the harder it becomes to find existing endpoints and to establish a communication channel with them.
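To make the discovery and routing problems a bit more concrete, here is a minimal sketch of what a registry does at its core. Everything in it (the `ServiceRegistry` class, the service name, the addresses) is made up for illustration; real registries add health checks, expiry and replication on top of this.

```python
from collections import defaultdict


class ServiceRegistry:
    """Toy in-memory registry: instances register, callers resolve by name."""

    def __init__(self):
        self._endpoints = defaultdict(list)  # service name -> list of addresses
        self._cursors = {}                   # service name -> round-robin counter

    def register(self, service, address):
        if address not in self._endpoints[service]:
            self._endpoints[service].append(address)

    def deregister(self, service, address):
        if address in self._endpoints[service]:
            self._endpoints[service].remove(address)

    def resolve(self, service):
        """Return the next endpoint for `service`, rotating round-robin."""
        endpoints = self._endpoints.get(service)
        if not endpoints:
            raise LookupError(f"no endpoints registered for {service!r}")
        position = self._cursors.get(service, 0) % len(endpoints)
        self._cursors[service] = position + 1
        return endpoints[position]


registry = ServiceRegistry()
registry.register("billing", "10.0.0.1:8080")  # hypothetical addresses
registry.register("billing", "10.0.0.2:8080")
first = registry.resolve("billing")
second = registry.resolve("billing")
```

Two consecutive `resolve` calls hand out the two registered instances in turn, which is the simplest possible form of load balancing on top of discovery.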

Tracing & Monitoring

A single transaction in a microservice architecture might travel through multiple services, making it hard to trace its journey.
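One common way to follow a transaction across services is to attach an identifier to the first request and pass it along on every hop. The sketch below assumes a made-up header name (`X-Trace-Id`) and two hypothetical services modelled as plain functions; it only shows the propagation idea, not a full tracing system.

```python
import uuid

TRACE_HEADER = "X-Trace-Id"  # hypothetical header name, chosen for this example


def with_trace(headers):
    """Return a copy of the headers that carries a trace ID.

    An incoming ID is reused; a new one is only minted at the edge.
    """
    outgoing = dict(headers)
    outgoing.setdefault(TRACE_HEADER, uuid.uuid4().hex)
    return outgoing


def handle_order(headers, log):
    """Hypothetical 'orders' service: logs the trace ID, then calls payments."""
    headers = with_trace(headers)
    log.append(("orders", headers[TRACE_HEADER]))
    return handle_payment(headers, log)


def handle_payment(headers, log):
    """Hypothetical 'payments' service: logs the same trace ID it was given."""
    headers = with_trace(headers)
    log.append(("payments", headers[TRACE_HEADER]))
    return headers[TRACE_HEADER]


log = []
trace_id = handle_order({}, log)
```

Both log entries end up with the same ID, so a log search for that ID reconstructs the request's path through the system.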

Versioning

As systems mature it becomes paramount to update available endpoints and APIs while simultaneously ensuring that older versions remain available.

The solutions

Alright, time to meet the contenders for solving these problems: Service Meshes, API Gateways, and Message Queues. Of course, there are also a number of other approaches, ranging from simple static load balancing and fixed IPs to central orchestration servers - but for the purpose of this post, let's look at the currently most popular and in many ways most sophisticated options.

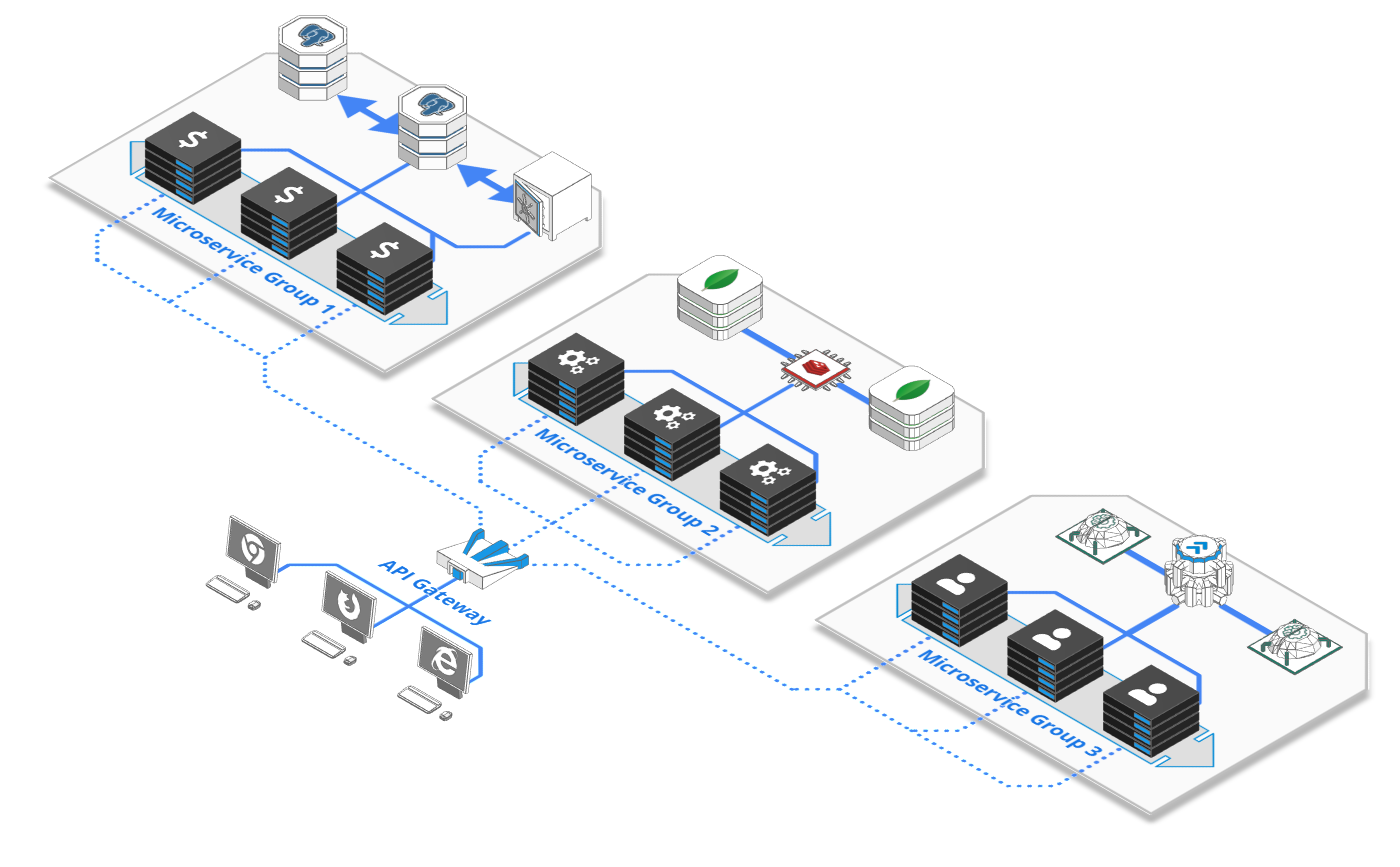

API Gateways

Benefits

API Gateways are powerful in features, comparatively low in complexity and easily understood by seasoned web-veterans. They provide a solid layer of defense against the public internet and offload a lot of repetitive tasks, such as user authentication or data validation.
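As a rough illustration of the "repetitive tasks" a gateway takes off your services, here is a toy gateway function. The token store, the route table and the `item` field are all invented for this example; a real gateway such as Kong is configured rather than hand-written like this.

```python
# Hypothetical token store and backend routes, for illustration only.
VALID_TOKENS = {"secret-token": "alice"}

ROUTES = {
    "/orders": lambda user, body: {
        "status": 200,
        "body": f"order for {body['item']} created for {user}",
    },
}


def gateway(path, headers, body):
    """Authenticate, route and validate before any backend is involved."""
    # 1. Authentication: reject unknown or missing tokens.
    user = VALID_TOKENS.get(headers.get("Authorization", ""))
    if user is None:
        return {"status": 401, "body": "unauthorized"}

    # 2. Routing: find the backend responsible for this path.
    backend = ROUTES.get(path)
    if backend is None:
        return {"status": 404, "body": "unknown route"}

    # 3. Validation: backends never see malformed payloads.
    if not isinstance(body, dict) or "item" not in body:
        return {"status": 400, "body": "missing field: item"}

    return backend(user, body)


response = gateway("/orders", {"Authorization": "secret-token"}, {"item": "book"})
```

The backend behind `/orders` only ever receives authenticated, well-formed requests, which is exactly the offloading described above.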

Downsides

API Gateways are fairly centralized. They can be deployed in a horizontally scalable fashion, but unlike service meshes, they still require a single point to register new APIs or change configuration. Seen from an organizational perspective, they are likely to be maintained by a single team.

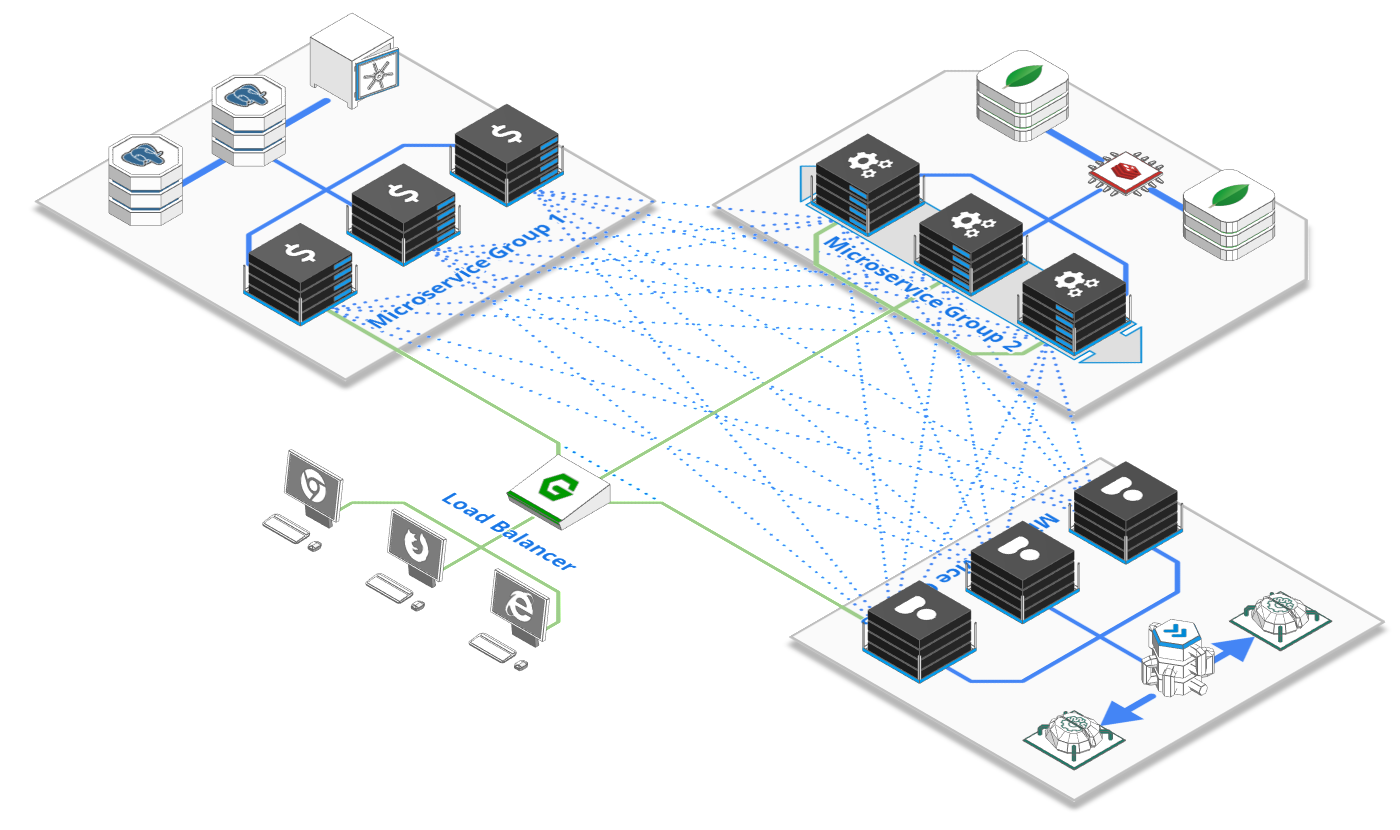

Service Meshes

Benefits

Service meshes are more dynamic and can easily shift shape to accommodate new functionality and endpoints. Their decentralized nature makes it easier to work on microservices within fairly isolated teams.

Downsides

Service meshes can be quite complex and involve a lot of moving parts. Fully utilizing Istio, for instance, requires the deployment of a separate traffic manager, a telemetry gatherer, a certificate manager and a sidecar proxy for each service instance. They are also a fairly recent development, making something that constitutes the very backbone of your architecture worryingly young.

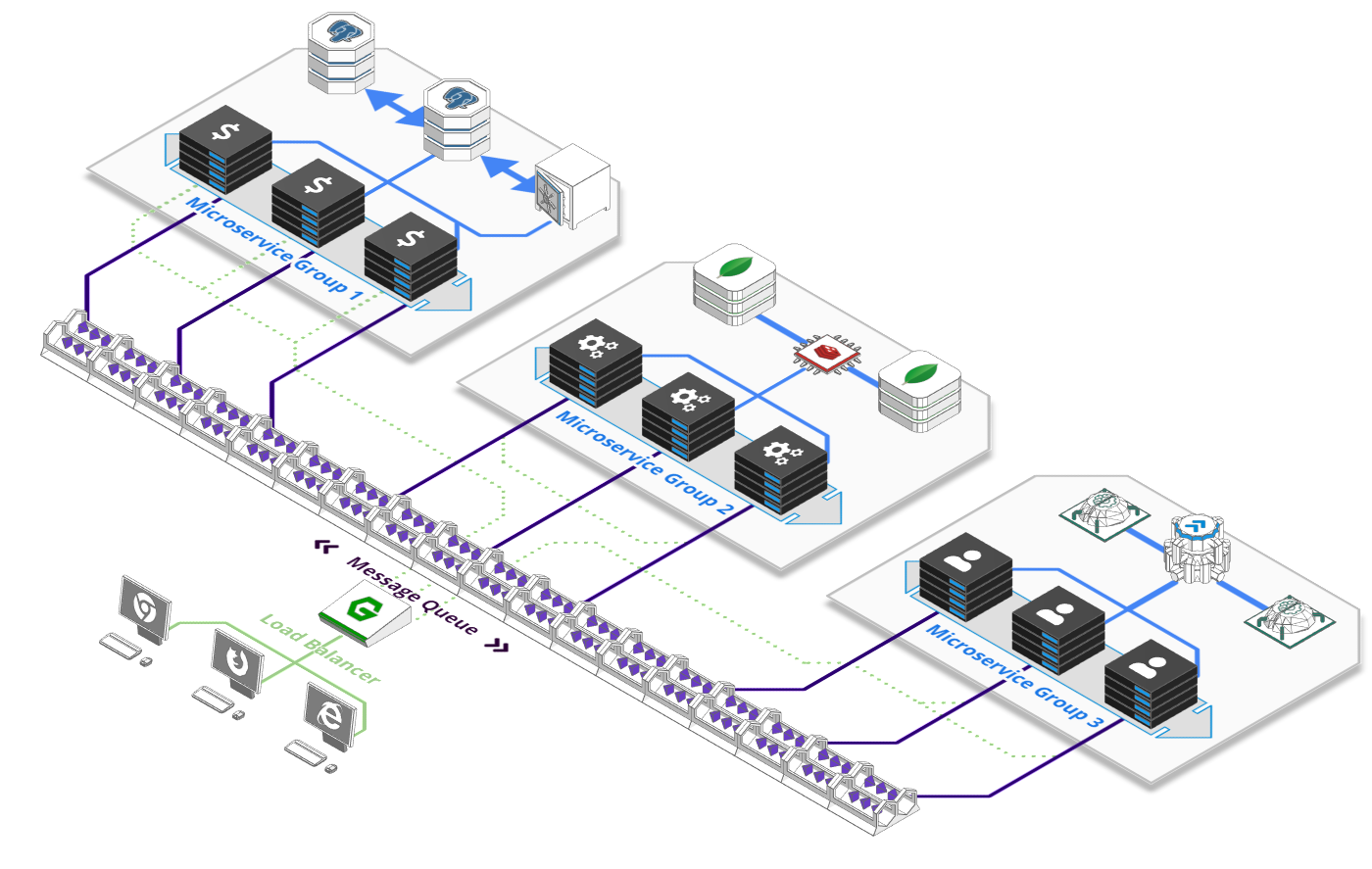

Message Queues

Message Queues allow you to establish complex communication patterns among services by decoupling sender and receiver. They achieve this using a number of concepts, such as topic-based routing or publish-subscribe messaging, as well as buffered task queues that make it easy for multiple instances to process different aspects of a task over time.
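To show what topic-based publish-subscribe boils down to, here is a minimal in-memory sketch. The `Broker` class and the topic names are invented for this example, and delivery is synchronous, whereas a real broker would buffer and deliver over the network.

```python
from collections import defaultdict


class Broker:
    """Toy in-memory broker: publishers and subscribers only share a topic name."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of callbacks

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver `message` to every subscriber of `topic`; return the count."""
        callbacks = list(self._subscribers.get(topic, []))
        for callback in callbacks:
            callback(message)
        return len(callbacks)


broker = Broker()
thumbnails, notifications = [], []

# Two independent consumers of the same (hypothetical) topic.
broker.subscribe("video.uploaded", thumbnails.append)
broker.subscribe("video.uploaded", notifications.append)

# The sender knows the topic, but not who is listening.
delivered = broker.publish("video.uploaded", {"id": 42})
```

The publisher never references either consumer directly, so consumers can be added or removed without touching the sending service.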

Message Queues have been around for ages, resulting in a wide selection to choose from. Popular open source options include Apache Kafka and brokers like RabbitMQ or HornetQ, while cloud providers offer managed services such as AWS SQS or Kinesis, Google Pub/Sub or Azure Service Bus.

Benefits

Simply decoupling sender and receiver is a potent concept that makes a number of other concepts such as health checks, routing, endpoint discovery or load balancing unnecessary. Instances can pick relevant tasks from a buffered queue as and when they are ready to do so. This becomes especially powerful when auto-orchestration and scaling decisions are based on the message count in each queue, leading to highly resource efficient systems.
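Both ideas from this paragraph, workers pulling tasks when they are ready and scaling on queue depth, fit into a short sketch using Python's standard `queue` and `threading` modules. The function names and the thresholds (ten messages per worker, at most twenty workers) are arbitrary assumptions for illustration, not recommendations.

```python
import math
import queue
import threading


def desired_workers(backlog, per_worker=10, min_workers=1, max_workers=20):
    """Scaling decision based purely on how many messages are waiting."""
    wanted = math.ceil(backlog / per_worker)
    return max(min_workers, min(max_workers, wanted))


def run_workers(tasks, worker_count, handler):
    """Start workers that each pull tasks until the queue is empty."""
    results = []
    lock = threading.Lock()

    def worker():
        while True:
            try:
                item = tasks.get_nowait()  # pull work only when ready for it
            except queue.Empty:
                return
            outcome = handler(item)
            with lock:
                results.append(outcome)
            tasks.task_done()

    threads = [threading.Thread(target=worker) for _ in range(worker_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


tasks = queue.Queue()
for number in range(25):
    tasks.put(number)

worker_count = desired_workers(tasks.qsize())
results = run_workers(tasks, worker_count, lambda n: n * 2)
```

Note that nothing here routes tasks to specific workers or checks their health: a worker that is busy or gone simply doesn't pull the next task.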

Downsides

Message Queues are not good at request/response communication. Some allow this to be shoehorned on top of existing concepts, but it's not really what they are made for. Due to their buffered nature, they can also add significant latency to a system. They are also fairly centralized (though horizontally scalable) and can be quite costly to run at scale.

So - when to choose which?

Actually - this is not necessarily an either/or decision. In fact, it can make perfect sense to front one's public-facing API with an API gateway, run a service mesh to handle inter-service communication and back things with a message queue for asynchronous task scheduling.

But if we narrow the focus purely to inter-service communication, one possible answer could be:

- If you already run an API Gateway for your public-facing API, you might as well keep complexity low and reuse it for inter-service communication.

- If you work within a large organization with siloed teams and poor communication, a service mesh can give you the highest degree of independence, making it easy to add new services over time.

- If you are designing a system where individual steps are spaced out over time, e.g. a YouTube-like service where upload, processing, and publishing of videos can take a couple of minutes, use a message or task queue.

What the future holds

Despite all the hype, service meshes are a fairly young concept: Istio, the most popular option, only reached version 1.0 in July 2018. My prediction would be that these concepts increasingly merge, resulting in a more decentralized mesh of services providing both external API access and internal communication - maybe even in a buffered, queue-like fashion.